ML: PINN代码-pitzDaily流动 (适配FVM网格)

本文在ML: PINN代码实例-顶盖驱动流 (NS方程) 的基础上,增加OpenFOAM网格适配功能。主要是代码可以自动读取OpenFOAM网格。因此对于任意的OpenFOAM算例,可以直接进行训练。但是需要注意:

目前的代码只支持2D,也就是说NS方程只是植入的二维方程;

数据损失部分是硬植入,因此需要用户去更改代码自定义;

PINN训练的时候,PINN所需要的网格可能跟FVM的有区别,在这种情况下,PINN网格与FVM网格不一致,则不适配与本代码(本代码PINN网格与FVM网格完全一致);

由于代码量过大,不对其进行介绍。建议系统学习OpenFOAM以及libtorch后再来理解。

// pitzDaily flow predicted by PINN, by dyfluid.com

#include <torch/torch.h>

#include "fvCFD.H"

// 神经网络头文件

struct ANN

:

torch::nn::Cloneable<ANN>

{

torch::nn::Sequential net_;

int n_;

int l_;

int ipNum_;

int opNum_;

public:

ANN(int n, int l, int ipNum, int opNum)

:

n_(n),//neuron number

l_(l),//layer number

ipNum_(ipNum),//input number

opNum_(opNum)//output number

{

reset();

}

void reset() override

{

net_ = torch::nn::Sequential();

// input layers

net_->push_back(torch::nn::Linear(ipNum_, n_));

net_->push_back(torch::nn::Tanh());

// hidden layers

for (int i = 0; i < l_; i++)

{

net_->push_back(torch::nn::Linear(n_, n_));

net_->push_back(torch::nn::Tanh());

}

// output layer

net_->push_back(torch::nn::Linear(n_, opNum_));

register_module("net", net_);

}

auto forward(torch::Tensor x)

{

return net_->forward(x);

}

};

int main(int argc, char *argv[])

{

#include "setRootCaseLists.H"

#include "createTime.H"

#include "createMesh.H"

// 必要的神经网络参数

IOdictionary trainingProperties

(

IOobject

(

"trainingProperties",

runTime.constant(),

mesh,

IOobject::MUST_READ_IF_MODIFIED,

IOobject::NO_WRITE

)

);

// network

int iter = trainingProperties.lookup<int>("iter");

double lr = trainingProperties.lookup<double>("learningRate");

int NNnum = trainingProperties.lookup<int>("numberOfNeu");

int layers = trainingProperties.lookup<int>("numberOfLayers");

int ipNum = trainingProperties.lookup<int>("inputNumber");

int opNum = trainingProperties.lookup<int>("outputNumber");

// Outer iterations

bool CFD = trainingProperties.lookupOrDefault<bool>("CFD", false);

bool training = trainingProperties.lookupOrDefault<bool>("training", false);

int BATCH = trainingProperties.lookupOrDefault<int>("batch", 0);

int CHANNEL = trainingProperties.lookupOrDefault<int>("channel", 0);

torch::manual_seed(0);

auto net = std::make_shared<ANN>(NNnum, layers, ipNum, opNum);

auto crit = torch::nn::MSELoss();

torch::optim::AdamW opti(net->parameters(), torch::optim::AdamWOptions(lr));

ifstream file;

file.open("net.pth");

if (file)

{

Info<< "Netword exists, reading..." << endl;

torch::load(net, "net.pth");

}

else

{

Info<< "File does not exists, train from scratch"

<< endl;

}

// 流体流动相关参数,训练权重等

const dictionary& pinnProperties = trainingProperties.subDict("pinnProperties");

double nu = readScalar(pinnProperties.lookup("nu"));

double vel = readScalar(pinnProperties.lookup("inletU"));

double w_data = readScalar(pinnProperties.lookup("weightsDATA"));

double w_bd = readScalar(pinnProperties.lookup("weightsBD"));

double w_pde = readScalar(pinnProperties.lookup("weightsPDE"));

// OpenFOAM网格与libtorch适配

vectorField ofMesh = mesh.C();

auto meshTorch = torch::full({ofMesh.size(),1,2}, 0.0);

forAll(ofMesh, i)

{

double x = ofMesh[i].x();

double y = ofMesh[i].y();

auto posi = torch::tensor({x,y}).reshape({1,2});

meshTorch[i] = posi;

}

const surfaceVectorField& Cf = mesh.Cf();

const surfaceVectorField& Sf = mesh.Sf();

const surfaceScalarField& magSf = mesh.magSf();

label inletID = mesh.boundaryMesh().findPatchID("inlet");

label outletID = mesh.boundaryMesh().findPatchID("outlet");

label wallID = mesh.boundaryMesh().findPatchID("wall");

vectorField cfInlet = Cf.boundaryField()[inletID];

vectorField cfOutlet = Cf.boundaryField()[outletID];

vectorField cfWall = Cf.boundaryField()[wallID];

vectorField nWall =

Sf.boundaryField()[wallID]/magSf.boundaryField()[wallID];

auto inlet = torch::full({cfInlet.size(),1,2}, 0.0);

auto outlet = torch::full({cfOutlet.size(),1,2}, 0.0);

auto wall = torch::full({cfWall.size(),1,2}, 0.0);

auto nlx = torch::full({cfWall.size()}, 0.0);

auto nly = torch::full({cfWall.size()}, 0.0);

forAll(cfInlet, i)

{

double x = cfInlet[i].x();

double y = cfInlet[i].y();

auto posi = torch::tensor({x,y}).reshape({1,2});

inlet[i] = posi;

}

forAll(cfWall, i)

{

double x = cfWall[i].x();

double y = cfWall[i].y();

auto posi = torch::tensor({x,y}).reshape({1,2});

wall[i] = posi;

}

forAll(cfOutlet, i)

{

double x = cfOutlet[i].x();

double y = cfOutlet[i].y();

auto posi = torch::tensor({x,y}).reshape({1,2});

outlet[i] = posi;

}

forAll(cfWall, i)

{

nlx[i] = nWall[i].x();

nly[i] = nWall[i].y();

}

// PINN代码正文在这里开始

meshTorch = meshTorch.reshape({-1,2});

inlet = inlet.reshape({-1,2});

outlet = outlet.reshape({-1,2});

wall = wall.reshape({-1,2});

auto u_inlet = torch::full({cfInlet.size()}, vel);

auto v_inlet = torch::full({cfInlet.size()}, 0.0);

meshTorch.requires_grad_(true);

inlet.requires_grad_(true);

outlet.requires_grad_(true);

wall.requires_grad_(true);

// 数据损失点1

auto dataPosi = torch::full({9,1,2}, 0.0);

dataPosi[0] = torch::tensor({-0.02 , 0.012}).reshape({1,2});

dataPosi[1] = torch::tensor({-0.018, 0.012}).reshape({1,2});

dataPosi[2] = torch::tensor({-0.016, 0.012}).reshape({1,2});

dataPosi[3] = torch::tensor({-0.014, 0.012}).reshape({1,2});

dataPosi[4] = torch::tensor({-0.012, 0.012}).reshape({1,2});

dataPosi[5] = torch::tensor({-0.01 , 0.012}).reshape({1,2});

dataPosi[6] = torch::tensor({0.0 , 0.012}).reshape({1,2});

dataPosi[7] = torch::tensor({0.1 , 0.012}).reshape({1,2});

dataPosi[8] = torch::tensor({0.2 , 0.012}).reshape({1,2});

dataPosi = dataPosi.reshape({-1,2});

if (torch::cuda::is_available())

{

std::cout<< "Running on GPU" << std::endl;

//

}

else

{

std::cout<< "Running on CPU" << std::endl;

}

for (int i = 0; i < iter; i++)

{

opti.zero_grad();

auto UP = net->forward(meshTorch);

auto UPinlet = net->forward(inlet);

auto UPoutlet = net->forward(outlet);

auto UPwall = net->forward(wall);

auto UPdata = net->forward(dataPosi);

auto u_pred = UP.index({torch::indexing::Slice(), 0});

auto v_pred = UP.index({torch::indexing::Slice(), 1});

auto p_pred = UP.index({torch::indexing::Slice(), 2});

auto u_pred_inlet = UPinlet.index({torch::indexing::Slice(), 0});

auto v_pred_inlet = UPinlet.index({torch::indexing::Slice(), 1});

auto p_pred_inlet = UPinlet.index({torch::indexing::Slice(), 2});

auto u_pred_outlet = UPoutlet.index({torch::indexing::Slice(), 0});

auto v_pred_outlet = UPoutlet.index({torch::indexing::Slice(), 1});

auto p_pred_outlet = UPoutlet.index({torch::indexing::Slice(), 2});

auto u_pred_wall = UPwall.index({torch::indexing::Slice(), 0});

auto v_pred_wall = UPwall.index({torch::indexing::Slice(), 1});

auto p_pred_wall = UPwall.index({torch::indexing::Slice(), 2});

auto u_pred_data = UPdata.index({torch::indexing::Slice(), 0});

auto v_pred_data = UPdata.index({torch::indexing::Slice(), 1});

auto p_pred_data = UPdata.index({torch::indexing::Slice(), 2});

auto dpdMesh =

torch::autograd::grad

(

{p_pred},

{meshTorch},

{torch::ones_like(p_pred)},

true,

true

)[0];

auto dpdx = dpdMesh.index({torch::indexing::Slice(), 0});

auto dpdy = dpdMesh.index({torch::indexing::Slice(), 1});

auto dpdxMesh =

torch::autograd::grad

(

{dpdx},

{meshTorch},

{torch::ones_like(dpdx)},

true,

true

)[0];

auto dpdyMesh =

torch::autograd::grad

(

{dpdy},

{meshTorch},

{torch::ones_like(dpdy)},

true,

true

)[0];

auto dpdxx = dpdxMesh.index({torch::indexing::Slice(), 0});

auto dpdyy = dpdyMesh.index({torch::indexing::Slice(), 1});

auto dudMesh =

torch::autograd::grad

(

{u_pred},

{meshTorch},

{torch::ones_like(u_pred)},

true,

true

)[0];

auto dudx = dudMesh.index({torch::indexing::Slice(), 0});

auto dudy = dudMesh.index({torch::indexing::Slice(), 1});

auto dvdMesh =

torch::autograd::grad

(

{v_pred},

{meshTorch},

{torch::ones_like(v_pred)},

true,

true

)[0];

auto dvdx = dvdMesh.index({torch::indexing::Slice(), 0});

auto dvdy = dvdMesh.index({torch::indexing::Slice(), 1});

auto dudxMesh =

torch::autograd::grad

(

{dudx},

{meshTorch},

{torch::ones_like(dudx)},

true,

true

)[0];

auto dudxx = dudxMesh.index({torch::indexing::Slice(), 0});

auto dudyMesh =

torch::autograd::grad

(

{dudy},

{meshTorch},

{torch::ones_like(dudy)},

true,

true

)[0];

auto dudyy = dudyMesh.index({torch::indexing::Slice(), 1});

auto dudxy = dudyMesh.index({torch::indexing::Slice(), 0});

auto dvdxMesh =

torch::autograd::grad

(

{dvdx},

{meshTorch},

{torch::ones_like(dvdx)},

true,

true

)[0];

auto dvdyMesh =

torch::autograd::grad

(

{dvdy},

{meshTorch},

{torch::ones_like(dvdy)},

true,

true

)[0];

auto dvdxx = dvdxMesh.index({torch::indexing::Slice(), 0});

auto dvdxy = dvdxMesh.index({torch::indexing::Slice(), 1});

auto dvdyy = dvdyMesh.index({torch::indexing::Slice(), 1});

auto dpdWall =

torch::autograd::grad

(

{p_pred_wall},

{wall},

{torch::ones_like(p_pred_wall)},

true,

true

)[0];

auto dpdx_wall = dpdWall.index({torch::indexing::Slice(), 0});

auto dpdy_wall = dpdWall.index({torch::indexing::Slice(), 1});

auto dpdInlet =

torch::autograd::grad

(

{p_pred_inlet},

{inlet},

{torch::ones_like(p_pred_inlet)},

true,

true

)[0];

auto dpdx_inlet = dpdInlet.index({torch::indexing::Slice(), 0});

auto dudOutlet =

torch::autograd::grad

(

{u_pred_outlet},

{outlet},

{torch::ones_like(u_pred_outlet)},

true,

true

)[0];

auto dudx_outlet = dudOutlet.index({torch::indexing::Slice(), 0});

auto cont1 = dudx + dvdy;

auto mom1 = 2.0*u_pred*dudx + v_pred*dudy + u_pred*dvdy

- nu*(dudxx + dudyy) - nu*(dudxx + dvdxy) + dpdx;

auto mom2 = u_pred*dvdx + v_pred*dudx + 2.0*v_pred*dvdy

- nu*(dvdxx + dvdyy) - nu*(dudxy + dvdyy) + dpdy;

auto loss_cont1 = 100*crit(cont1, 0*cont1);

auto loss_mom1 = crit(mom1, 0*mom1);

auto loss_mom2 = crit(mom2, 0*mom2);

auto loss_wall =

crit(u_pred_wall, 0*u_pred_wall)

+ crit(v_pred_wall, 0*v_pred_wall)

+ crit(dpdx_wall*nlx + dpdy_wall*nly, 0*dpdx_wall);

auto loss_inlet =

crit(u_pred_inlet, u_inlet)

+ crit(v_pred_inlet, v_inlet)

+ crit(dpdx_inlet, 0*dpdx_inlet);

auto loss_outlet =

crit(p_pred_outlet, 0*p_pred_outlet)

+ crit(dudx_outlet, 0*dudx_outlet);

// 数据损失点2

auto u_data = torch::clone(u_pred_data);

u_data[0] = 0.100576;

u_data[1] = 0.105416;

u_data[2] = 0.119608;

u_data[3] = 0.126239;

u_data[4] = 0.136212;

u_data[5] = 0.139621;

u_data[6] = 0.144101;

u_data[7] = 0.059066;

u_data[8] = 0.059812;

auto v_data = torch::clone(v_pred_data);

v_data[0] = 0.00104886;

v_data[1] = 0.00234075;

v_data[2] = 0.00260724;

v_data[3] = 0.00198026;

v_data[4] = 6.03697e-05;

v_data[5] = -0.00107153;

v_data[6] = -0.0160208;

v_data[7] = -0.00029105;

v_data[8] = -0.00081997;

auto p_data = torch::clone(p_pred_data);

p_data[0] = 0.136748;

p_data[1] = 0.138309;

p_data[2] = 0.138134;

p_data[3] = 0.136637;

p_data[4] = 0.132631;

p_data[5] = 0.130444;

p_data[6] = 0.112201;

p_data[7] = 0.066805;

p_data[8] = 0.042315;

auto loss_data =

w_data*(crit(u_pred_data, u_data) + crit(v_pred_data, v_data));

auto loss_boundary = w_bd*(loss_inlet + loss_wall + loss_outlet);

auto loss_pde = w_pde*(loss_cont1 + loss_mom1 + loss_mom2);

auto loss = loss_pde + loss_boundary + loss_data;

loss.backward();

opti.step();

if (i % 100 == 0)

{

std::cout<< i

<< ": pde: " << loss_pde.item<double>()

<< ": data: " << loss_data.item<double>()

<< ", boundary: " << loss_boundary.item<double>()

<< ", cont: " << loss_cont1.item<double>()

<< ", momx: " << loss_mom1.item<double>()

<< ", momy: " << loss_mom2.item<double>()

<< ", All: " << loss.item<double>()

<< std::endl;

std::ofstream meshTorchfile("mesh");

meshTorchfile << meshTorch << "\n";

std::ofstream ufile("up");

ufile << UP << "\n";

torch::save(net, "net.pth");

}

}

return 0;

}

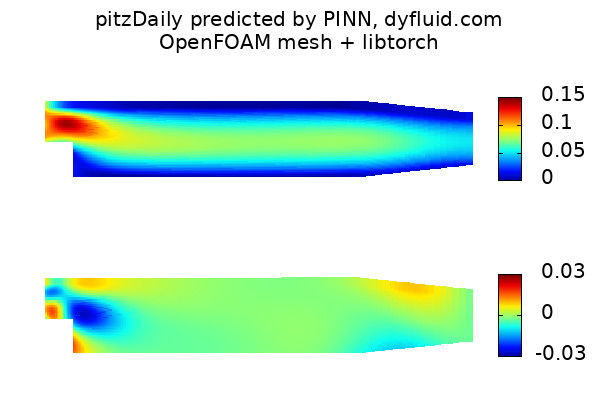

该代码运行后,会自动输出结果文件。可以参考ML: PINN代码实例-顶盖驱动流 (NS方程) 来生成图片。相应的结果如下:

这个算例训练了非常长的时间,几点特征:

如果采用原生的NS方程,且采用FVM同样的网格点,FVM大概2秒算完,PINN训练大约需要30小时(GPU),且还没有训练结束。如果采用CPU训练,会特别慢;

建议调用拓展的NS方程,例如将连续性方程转化为流动的势函数,研究能否加速训练;

建议生成适配pitzDaily网格的配点,研究能否加速训练;